5 Best Practices for Building Scalable Data Pipelines

Learn how leveraging PySpark for efficient processing and Kafka for real-time streaming can optimize your data workflows, ensuring reliability, fault tolerance, and high performance.

4/10/20253 min read

In today’s data-driven world, scalable data pipelines are essential for organizations to process and analyze large volumes of data efficiently. Tools like Apache Kafka and PySpark are particularly effective for building robust pipelines due to their distributed architectures and real-time processing capabilities. Below are five best practices to ensure your data pipelines are scalable, efficient, and reliable.

1. Design a Distributed and Modular Architecture

A solid architecture is the foundation of scalable data pipelines. Start by adopting a distributed system that allows horizontal scaling, such as Kafka for data ingestion and PySpark for processing.

Apache Kafka: Kafka’s distributed architecture enables efficient ingestion of streaming data from multiple sources. Its ability to scale horizontally by adding brokers and partitions ensures high throughput and low latency59.

PySpark: PySpark leverages Spark’s distributed computing framework to process large datasets in parallel across clusters. This ensures scalability for both batch and real-time processing

A modular architecture further enhances scalability by breaking the pipeline into independent components (e.g., ingestion, transformation, storage). This allows targeted scaling of specific parts without disrupting the entire pipeline.

2. Implement Real-Time Data Processing

Real-time processing is critical for applications requiring immediate insights, such as fraud detection or personalized recommendations. Combining Kafka and PySpark enables seamless real-time processing.

Kafka Streams: Use Kafka Streams for real-time transformations and aggregations on ingested data. This ensures low-latency processing while maintaining fault tolerance9.

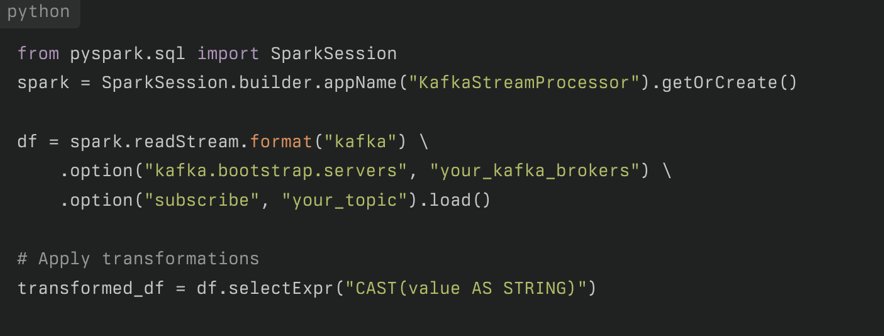

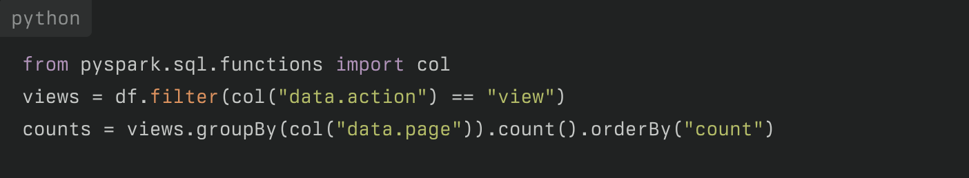

PySpark Streaming: PySpark’s readStream API allows you to consume Kafka topics in real-time and apply transformations such as filtering, aggregation, and sorting on streaming data

3. Optimize Data Processing Techniques

Efficiency is key to scalability. Optimize your pipeline by reducing unnecessary operations and leveraging distributed computing techniques.

Minimize Data Movement: Avoid pulling data into memory unnecessarily; instead, perform transformations directly on distributed datasets4.

Use SQL Queries: SQL-based transformations in PySpark are often more efficient than Python-based operations, making them easier to understand and maintain4.

Incremental Updates: Implement incremental processing to handle only new or changed data instead of reprocessing entire datasets7.

4. Leverage Parallel Processing

Parallel processing is essential for handling large volumes of data quickly. Both Kafka and PySpark are designed to support this.

Kafka Multi-Topic Design: Segregate streams into multiple topics based on attributes or logic to enable parallel ingestion and processing59.

PySpark RDDs: Spark’s Resilient Distributed Datasets (RDDs) allow distributed computations across clusters, ensuring fault tolerance and efficient resource utilization .

5. Monitor and Scale Dynamically

Continuous monitoring ensures the pipeline remains performant as data volumes grow.

Kafka Cluster Scaling: Add brokers or partitions dynamically to handle increasing message throughput. Optimize replication factors for fault tolerance without overloading resources9.

PySpark Resource Allocation: Allocate sufficient memory and CPU across nodes in the cluster to prevent bottlenecks during peak loads8.

Use monitoring tools like Prometheus or Datadog to track pipeline performance metrics such as latency, throughput, and error rates. Automated alerts can help address issues proactively before they impact operations17.

Conclusion

Building scalable data pipelines requires thoughtful design, efficient tools, and continuous optimization. By leveraging Apache Kafka for real-time ingestion and PySpark for distributed processing, you can create robust pipelines capable of handling growing data demands. Follow these best practices—distributed architecture, real-time capabilities, optimized processing, parallel execution, and dynamic scaling—to ensure your pipelines are future-proofed for evolving business needs.

Whether you're working with streaming IoT data or batch analytics for e-commerce platforms, combining Kafka’s fault-tolerant messaging system with PySpark’s high-performance computing will set you up for success in building scalable systems.

Crafting data-driven solutions for your success.

Quick Access

© 2025. All rights reserved.

Customer Feedback

Stay Connected